Use cases

UWSim can be used in many different ways depending on the specific application. These are two possible use cases:

Validate perception and control algorithms

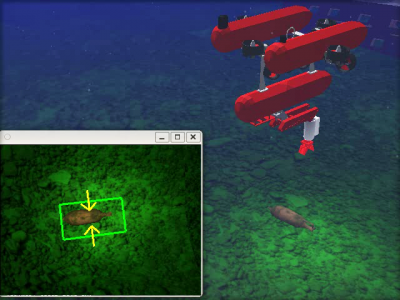

As UWSim supports virtual cameras, vision-based algorithms can be applied to virtual images that simulate real conditions. The figure above shows a scenario where an I-AUV is tracking an amphora found on the seabed. In this example, UWSim only renders the scenario and publishes the image captured by the virtual camera on the network. An external tracking algorithm, completely independent of UWSim, receives the image as if it came from a real camera and performs the tracking with its own libraries (e.g. OpenCV).
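As a rough illustration of the kind of external tracker described above, the sketch below locates a template (for example, a patch showing the amphora) in a frame by exhaustive sum-of-squared-differences (SSD) matching. It is a minimal sketch under stated assumptions: the frame is assumed to be already decoded into a 2D list of grayscale values, and `match_template` is a hypothetical helper, not part of UWSim. With OpenCV the same search would typically use `cv2.matchTemplate` with `cv2.TM_SQDIFF`, taking the minimum location reported by `cv2.minMaxLoc`.

```python
# Minimal sketch of an external tracker: exhaustive template matching by
# sum of squared differences (SSD). Assumption: the frame from the virtual
# camera has already been received and decoded into a 2D list of gray values.
from typing import List, Tuple

Image = List[List[float]]


def match_template(frame: Image, template: Image) -> Tuple[int, int]:
    """Return (row, col) of the top-left corner where the template fits best."""
    fh, fw = len(frame), len(frame[0])
    th, tw = len(template), len(template[0])
    best_score = float("inf")
    best_pos = (0, 0)
    for r in range(fh - th + 1):
        for c in range(fw - tw + 1):
            # SSD between the template and the frame window starting at (r, c)
            score = 0.0
            for i in range(th):
                for j in range(tw):
                    d = frame[r + i][c + j] - template[i][j]
                    score += d * d
            if score < best_score:  # lower SSD means a better match
                best_score, best_pos = score, (r, c)
    return best_pos


if __name__ == "__main__":
    # Synthetic 6x6 frame with a bright 2x2 "amphora" at rows 3-4, cols 1-2
    frame = [[0.0] * 6 for _ in range(6)]
    for r in (3, 4):
        for c in (1, 2):
            frame[r][c] = 255.0
    template = [[255.0, 255.0], [255.0, 255.0]]
    print(match_template(frame, template))  # (3, 1)
```

Exhaustive search is quadratic in image size and is only meant to show the idea; a real tracker would restrict the search to a window around the previous position.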

The output of the tracker could be used to feed a control algorithm that stabilizes the vehicle, performs grasping, etc. These controllers could be connected to a real robot or back to UWSim, thus closing the loop through an external architecture. [This video] shows a complete survey and intervention mission simulated in this way: vehicle and arm controllers developed in an external control architecture send their outputs to UWSim, which updates the virtual vehicle and arm accordingly and sends virtual sensor feedback back to the real controllers.
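The closed loop described above can be sketched for a single degree of freedom. This sketch rests on several assumptions. `FakeSimulator` is a hypothetical stand-in for UWSim: it integrates a lateral velocity command and returns the resulting offset to the target as virtual sensor feedback. `p_controller` is an illustrative proportional law, not the controller used in the video, and the gain and time step are arbitrary.

```python
# Minimal sketch of closing the loop through an external controller.
# FakeSimulator is a hypothetical stand-in for UWSim; the gain kp and the
# time step dt are illustrative values, not taken from UWSim.
from dataclasses import dataclass


@dataclass
class FakeSimulator:
    offset: float  # lateral offset between vehicle and tracked target [m]

    def step(self, velocity_cmd: float, dt: float) -> float:
        """Apply a lateral velocity command for dt seconds; return the new offset."""
        self.offset += velocity_cmd * dt
        return self.offset


def p_controller(measured_offset: float, kp: float = 0.8) -> float:
    """Proportional law: command a velocity that drives the offset towards zero."""
    return -kp * measured_offset


def run_loop(initial_offset: float, steps: int = 50, dt: float = 0.1) -> float:
    sim = FakeSimulator(offset=initial_offset)
    measurement = sim.offset  # first "virtual sensor" reading
    for _ in range(steps):
        cmd = p_controller(measurement)   # external controller computes command
        measurement = sim.step(cmd, dt)   # simulator updates and returns feedback
    return measurement


if __name__ == "__main__":
    print(f"final offset: {run_loop(2.0):.4f} m")
```

Each step multiplies the offset by (1 - kp*dt), so the offset converges towards zero whenever 0 < kp*dt < 2. Because the controller only sees measurements and emits commands, `FakeSimulator` could be replaced by an interface to a real robot or to UWSim without touching `p_controller`.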

Supervision/Playback of a survey

UWSim can also be used to play back a mission. For instance, the navigation data acquired by a real robot during a survey mission could be logged and then reproduced in UWSim to analyze the vehicle trajectory. If bathymetry and seafloor images are gathered during the survey, they can be used to build a textured terrain and visualize it in UWSim, yielding a virtual visualization of a real mission.
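Playing back logged navigation data amounts to sampling the recorded trajectory at the rate the visualizer draws frames. The following is a minimal sketch that assumes a hypothetical log of `(t, x, y, depth)` samples, which is not UWSim's own input format. Poses between logged samples are linearly interpolated, and `replay` yields one pose per rendered frame.

```python
# Minimal sketch of mission playback from a navigation log. The log format
# (t, x, y, depth) is a hypothetical example, not UWSim's input format.
from bisect import bisect_right
from typing import Iterator, List, Tuple

Sample = Tuple[float, float, float, float]  # (t [s], x [m], y [m], depth [m])
Pose = Tuple[float, float, float]          # (x, y, depth)


def pose_at(log: List[Sample], t: float) -> Pose:
    """Linearly interpolate the logged pose at time t, clamped to the log range."""
    times = [s[0] for s in log]
    if t <= times[0]:
        return log[0][1:]
    if t >= times[-1]:
        return log[-1][1:]
    k = bisect_right(times, t)  # times[k - 1] <= t < times[k]
    t0, *p0 = log[k - 1]
    t1, *p1 = log[k]
    a = (t - t0) / (t1 - t0)
    return tuple(v0 + a * (v1 - v0) for v0, v1 in zip(p0, p1))


def replay(log: List[Sample], fps: float = 10.0) -> Iterator[Tuple[float, Pose]]:
    """Yield (t, pose) at a fixed frame rate over the whole logged mission."""
    start, end = log[0][0], log[-1][0]
    n_frames = int((end - start) * fps) + 1
    for i in range(n_frames):
        t = start + i / fps  # computed from the index to avoid accumulated drift
        yield t, pose_at(log, t)


if __name__ == "__main__":
    log = [(0.0, 0.0, 0.0, 5.0), (10.0, 10.0, 0.0, 5.0), (20.0, 10.0, 10.0, 6.0)]
    for t, pose in replay(log, fps=0.5):
        print(f"t={t:5.1f} s  pose={pose}")
```

Linear interpolation is the simplest choice for positions. Vehicle orientation, if logged, would need a rotation-aware method such as quaternion slerp rather than component-wise blending.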

The video found [here] illustrates this concept. It shows a 3D visualization of a real survey carried out with an AUV in the CIRS water tank (University of Girona) during the 1st Annual Review of the TRIDENT EU Project. The robot was commanded to autonomously survey the floor. Meanwhile, odometry and imaging data were published on the network (via ROS topics). These data were recorded and later used as input to UWSim. UWSim could also have been run at the same time as the real robot, allowing the survey to be visualized in real time. Since UWSim also supports the visualization of external video, the real video stream can be overlaid on the virtual scenario.
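When data from several topics is recorded and replayed later, the messages have to be re-published in global timestamp order and with their original spacing. In ROS 1 this is typically handled by `rosbag record` and `rosbag play`, although the text above does not say which tool was used. The sketch below illustrates only the ordering and pacing logic. The topic names, payloads, and the `merged_stream`/`play` helpers are hypothetical.

```python
# Minimal sketch of replaying a multi-topic recording. Topic names, payloads
# and helper names are hypothetical; this is not the rosbag implementation.
import heapq
import time
from typing import Callable, Dict, Iterator, List, Tuple

Message = Tuple[float, str, object]  # (stamp [s], topic, payload)


def merged_stream(recording: Dict[str, List[Tuple[float, object]]]) -> Iterator[Message]:
    """Interleave per-topic, time-ordered (stamp, payload) lists into one stream."""
    per_topic = [
        [(stamp, topic, payload) for stamp, payload in msgs]
        for topic, msgs in recording.items()
    ]
    # heapq.merge assumes each input list is already sorted by the key
    return heapq.merge(*per_topic, key=lambda m: m[0])


def play(
    stream: Iterator[Message],
    publish: Callable[[str, object], None],
    rate: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> None:
    """Re-publish messages, sleeping between stamps to reproduce original timing."""
    prev = None
    for stamp, topic, payload in stream:
        if prev is not None:
            sleep((stamp - prev) / rate)  # rate > 1 plays back faster than real time
        publish(topic, payload)
        prev = stamp


if __name__ == "__main__":
    recording = {
        "/odometry": [(0.0, "pose 0"), (1.0, "pose 1")],
        "/camera": [(0.5, "frame 0"), (1.5, "frame 1")],
    }
    play(merged_stream(recording), lambda topic, msg: print(topic, msg), rate=10.0)
```

Injecting `sleep` and `publish` as parameters keeps the pacing logic testable without a network or real delays.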